We interviewed Efimia Panagiotaki, Self-Driving Software Engineer at StreetDrone, to find out how the company uses Velodyne LiDAR sensors in its platforms for urban autonomous driving.

What is your role at StreetDrone?

I have been working as a Software Engineer for the past two years. I am currently leading the full-stack development of StreetDrone’s self-driving software. At StreetDrone we provide an open, end-to-end solution for bringing autonomy to complex urban environments.

Where were you before joining StreetDrone?

Previously, I worked as a Data Processing Engineer at the Williams F1 team, providing race support during Formula 1 events. Before that, for three years while studying, I was a member of Formula Student teams: first the Prom Racing Team at NTU Athens, then the AMZ Driverless team at ETH Zurich. AMZ Racing and its Driverless team are known for setting records at Formula Student competitions, and they have won every competition they have entered since 2017. Formula Student was a very educational and, frankly, remarkable experience.

(Mapix technologies are also involved with Formula Student, sponsoring and mentoring the University of Edinburgh team EUFS, which has won the competition at Silverstone for the past two years.)

What projects are you working on at StreetDrone?

At StreetDrone we develop two drive-by-wire vehicle platforms: the StreetDrone Twizy and the StreetDrone eNV200. I am currently working on full-stack development and end-to-end integration of our open-source software on these vehicles, for testing and operation in restrictive ‘zone 1’ metropolitan areas. LiDAR is the main perception sensor we currently use.

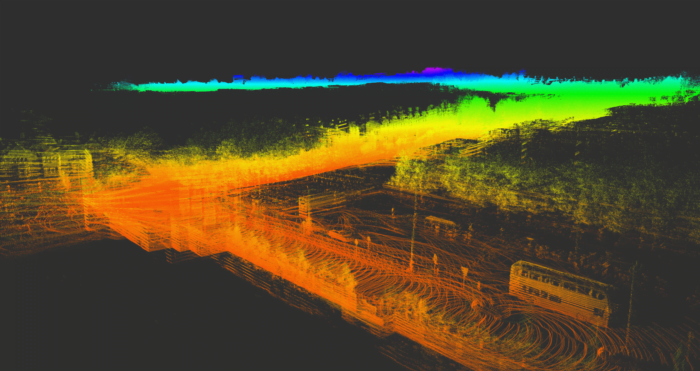

On the Twizy we use the Velodyne Puck (VLP-16), and on the eNV200, our 7-seater van platform, we use the Velodyne Ultra Puck (VLP-32). These units provide high-resolution data over the field of view our applications need. By processing the sensors’ 3D scans, we extract very accurate, dense point clouds of the environment.

We also use GPS and an IMU for localisation and pose estimation, and cameras for data collection. A radar provides redundancy in object detection.

How are you using LiDAR with your projects?

The LiDAR output is used throughout our entire perception pipeline. We use the LiDAR sensors for environment reconstruction: we extract detailed point clouds and use them to build 3D maps of the areas where the autonomous vehicles will operate. For localisation, we use a robust map-based point cloud matching method that lets the vehicle accurately estimate its own position. We also use the LiDAR for object detection, to accurately identify obstacles in the vehicle’s path. After each test or demo run, all our data is uploaded to the cloud for further processing or testing.
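The interview only describes StreetDrone’s localisation as “map-based point cloud matching”. As a rough illustration of the general idea, the sketch below uses a textbook 2D point-to-point iterative closest point (ICP) loop as a stand-in; it is not StreetDrone’s method. All function names are hypothetical, and a real LiDAR localiser works in 3D on much larger clouds with spatial indexes and outlier rejection.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def apply_transform(points: List[Point], theta: float, tx: float, ty: float) -> List[Point]:
    """Rotate each point by theta (radians) about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def best_rigid_transform(src: List[Point], dst: List[Point]) -> Tuple[float, float, float]:
    """Closed-form least-squares rotation and translation mapping src[i] onto dst[i]."""
    n = len(src)
    scx = sum(x for x, _ in src) / n
    scy = sum(y for _, y in src) / n
    dcx = sum(x for x, _ in dst) / n
    dcy = sum(y for _, y in dst) / n
    dot_sum, cross_sum = 0.0, 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - scx, py - scy
        bx, by = qx - dcx, qy - dcy
        dot_sum += ax * bx + ay * by
        cross_sum += ax * by - ay * bx
    # The angle that maximises the summed dot products of the centred, paired points.
    theta = math.atan2(cross_sum, dot_sum)
    c, s = math.cos(theta), math.sin(theta)
    # Translation carries the rotated source centroid onto the destination centroid.
    tx = dcx - (c * scx - s * scy)
    ty = dcy - (s * scx + c * scy)
    return theta, tx, ty


def nearest(point: Point, cloud: List[Point]) -> Point:
    """Brute-force nearest neighbour (a real system would use a k-d tree or voxel grid)."""
    return min(cloud, key=lambda q: (q[0] - point[0]) ** 2 + (q[1] - point[1]) ** 2)


def icp_localise(scan: List[Point], map_cloud: List[Point],
                 iterations: int = 20) -> Tuple[float, float, float]:
    """Estimate the pose (theta, tx, ty) that aligns a scan with a prior map cloud."""
    theta_total, tx_total, ty_total = 0.0, 0.0, 0.0
    current = list(scan)
    for _ in range(iterations):
        # Pair every scan point with its closest map point, then solve for the best fit.
        matches = [nearest(p, map_cloud) for p in current]
        theta, tx, ty = best_rigid_transform(current, matches)
        current = apply_transform(current, theta, tx, ty)
        # Compose this step with the running estimate: p -> R(R_total p + t_total) + t.
        c, s = math.cos(theta), math.sin(theta)
        tx_total, ty_total = c * tx_total - s * ty_total + tx, s * tx_total + c * ty_total + ty
        theta_total += theta
    return theta_total, tx_total, ty_total


if __name__ == "__main__":
    # Toy "map" and a "scan" of the same points seen from a slightly offset pose.
    map_cloud = [(float(x), float(y)) for x in range(5) for y in range(3)]
    scan = apply_transform(map_cloud, 0.03, 0.2, -0.1)
    theta, tx, ty = icp_localise(scan, map_cloud)
    print(theta, tx, ty)  # theta should come out very close to -0.03
```

ICP only converges when the initial pose guess is close enough for most nearest-neighbour pairings to be correct, which is one reason practical systems seed the matcher with GPS/IMU estimates like those mentioned above.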

What are the advantages of using LiDAR?

The main benefit is that the point cloud extracted from this LiDAR is quite dense, and the measurements are very accurate, with a negligible error margin. Weighing accuracy, density and the processing power needed, we have found this sensor to be the best fit for our application. Overall, it is a very reliable and robust perception system.

Another benefit of the LiDAR sensors is that they are not significantly affected by environmental conditions such as low light and rain. In fact, we tested the eNV200 platform in London during autumn and winter 2019 and did not have to stop the project once because of rain or other poor weather.

How do you find working with the Velodyne LiDAR sensors?

These units are very reliable overall; we have never had an issue with a Velodyne sensor.

How is LiDAR making a difference to your projects?

We rely heavily on the LiDAR unit, as it is the main sensor our vehicles use to perceive the environment. By fusing its output with other visual sensors, we are working towards a reliable perception system that gives our cars a three-dimensional view of their surroundings. In general, I see the future of LiDAR sensors heading towards lower unit costs, so that more robotics projects can start using them.

How have you found working with Mapix technologies?

The team at Mapix technologies have always been helpful. They are knowledgeable about the Velodyne range of LiDAR sensors and always fulfil our orders quickly and efficiently.
