Mapix technologies are proud to sponsor the Edinburgh University Formula Student (EUFS) team, a student club at the University. We have provided LiDAR equipment and mentoring by our CTO and founder Gert Riemersma since 2018. Now that the team has a few years' experience and success with their autonomous race cars, we asked them to share some of their key learnings with LiDAR.

The Formula Student competition

By Keane Quigley and Matthew Whyte, with contributions from Angus Stanton and Vivek Raja, all members of the EUFS team.

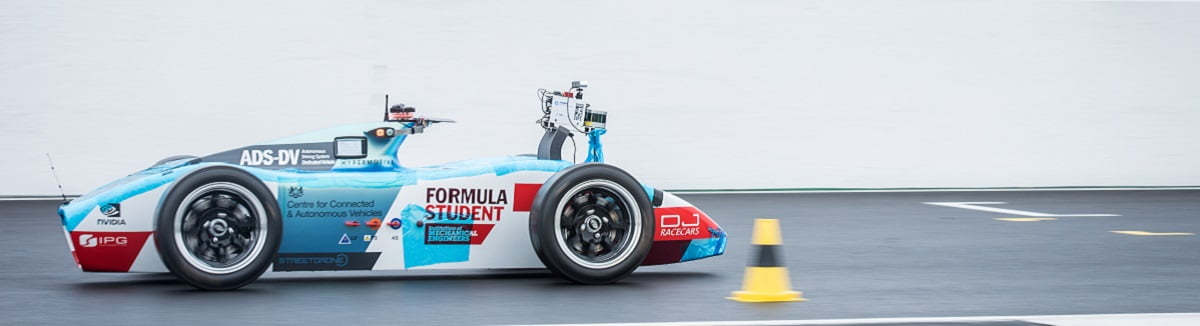

Formula Student is Europe’s most established educational engineering competition, now in its 22nd year. The competition aims to develop young engineers by providing real-world experience in building a race car. Each year over 100 university teams from around the world travel to Silverstone, where they take part in a number of static and dynamic events. Since 2018, autonomous elements have been added to the competition. The University of Edinburgh team won these in both 2018 and 2019.

Over 100 Edinburgh University students from a variety of different departments are involved in the 2020 challenge. This year the team are building their first electrically powered autonomous race car.

EUFS car designs for 2020. The car on the left is the electric-powered autonomous car, using the chassis from last year’s human-driven, internal combustion engine car.

The ‘eyes’ of the car

We’re the Perception sub-team of Edinburgh University Formula Student. Our goal is to build a self-driving electric race car for the Formula Student AI UK competition, held annually at Silverstone in July. Our sub-team’s work is the backbone of the whole software stack: the car can’t do anything without its ‘eyes’. We’re going to talk about why we wanted to use a LiDAR. We will also give a brief description of our pipeline by highlighting problems we’ve faced over the years and how we’ve solved them.

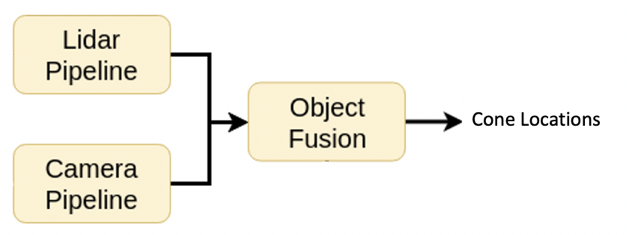

LiDAR and camera pipelines

To enable our autonomous car to see its surroundings, we initially looked at what is used in industry. We then examined how this could be applied to solve our problems. The first and obvious answer was to have cameras, which are cheap, capable and produce a lot of data. However, cameras also have their limitations. They are bad at detecting how far away objects are. Also, a few minutes of video footage can easily run to tens of gigabytes, which demands more storage and processing power. To address those concerns, we also decided to equip our car with a LiDAR sensor. LiDAR provides very accurate depth data while needing little storage space and processing.

We’re fortunate that Mapix technologies (also based in Edinburgh) has generously sponsored us. They have provided us with a Velodyne VLP-16 for the past two seasons, and it has played a key part in our perception pipeline.

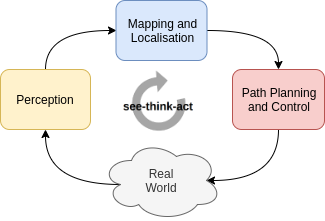

An overview of the perception pipeline

Fusing pipeline results together

We treat our LiDAR and camera pipelines separately to mitigate sensor failure. If one of the pipelines fails, we use the cone locations from the other pipeline. Otherwise we fuse the results from both pipelines to get the best of both worlds: the colour of the cones on the track from the camera pipeline, and the location of the cones from the LiDAR pipeline. If the camera pipeline fails, the LiDAR pipeline estimates the colour of the cones using a boundary estimation algorithm. If the LiDAR fails, the camera pipeline estimates depth using a stereo matching algorithm.
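The fallback-then-fuse logic described above can be sketched roughly as follows. This is a minimal illustration and not the team's actual code: the `Cone` type, the `fuse_cones` function, the axis convention and the 0.5 m matching distance are all assumptions made for the example. Each pipeline is assumed to return `None` when it has failed, and each pipeline's own output is assumed to already carry its fallback estimate (colour from boundary estimation, depth from stereo matching).

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cone:
    x: float  # metres ahead of the car (assumed convention)
    y: float  # metres to the left of the car (assumed convention)
    colour: str = "unknown"


def fuse_cones(lidar: Optional[List[Cone]],
               camera: Optional[List[Cone]],
               max_match_dist: float = 0.5) -> List[Cone]:
    """Combine LiDAR positions with camera colours, falling back to a
    single pipeline when the other one has failed (signalled by None)."""
    if lidar is None and camera is None:
        return []
    if lidar is None:
        return list(camera)   # camera-only: positions from stereo depth
    if camera is None:
        return list(lidar)    # LiDAR-only: colours from boundary estimation

    fused = []
    for lc in lidar:
        # Find the closest camera cone within the matching distance.
        best, best_d = None, max_match_dist
        for cc in camera:
            d = math.hypot(lc.x - cc.x, lc.y - cc.y)
            if d <= best_d:
                best, best_d = cc, d
        # Position always comes from the LiDAR; colour from the camera match.
        fused.append(Cone(lc.x, lc.y, best.colour if best else "unknown"))
    return fused
```

Keeping the LiDAR position and only borrowing the colour from the camera reflects the split of responsibilities described above; a real system would also need to decide what to do with camera cones that have no LiDAR match.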

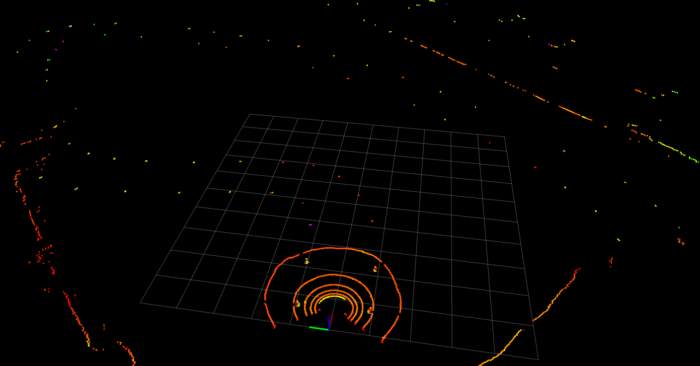

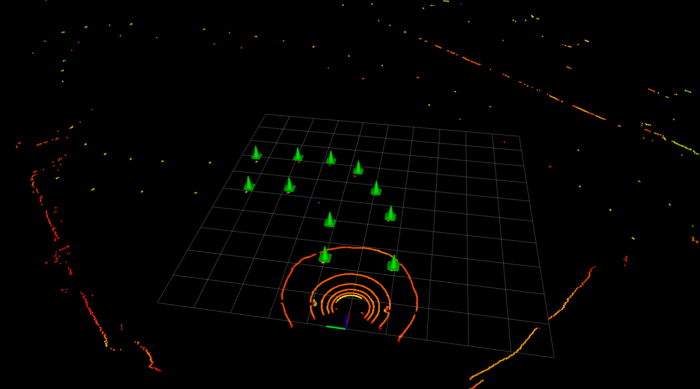

A raw LiDAR scan, with a 20m x 20m grid in front of the car (the RGB axes) for scale.

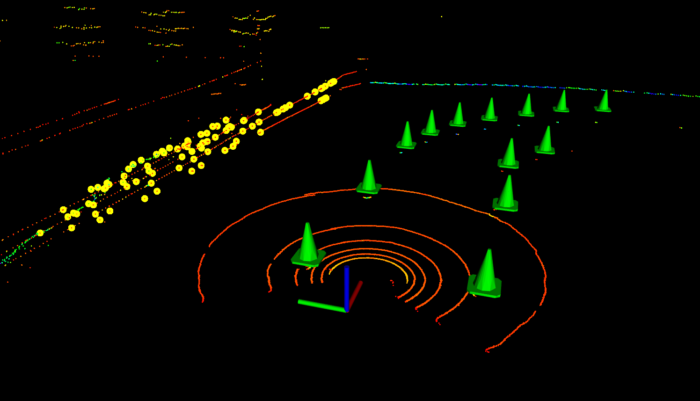

The results of the LiDAR pipeline

How the LiDAR pipeline works

We first trim our field of view by keeping only points in a 20m x 20m square in front of the car. The ground next to the car is then removed by segmenting it into sectors and each sector into bins. Points are then removed using a height threshold above the bins. This proves extremely effective at dealing with uneven track surfaces. However, the point cloud still has too much data to be useful. The LiDAR returns a plethora of points from objects such as rubber tyres, barriers, and people around the track.
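As a rough illustration of these two steps, here is a minimal sketch in plain Python. It is not the team's implementation. It assumes points are `(x, y, z)` tuples in metres with `x` pointing forward and `y` to the left, and it interprets the threshold as "anything within `threshold` of the lowest point in its bin is ground". The function names and all parameter values are hypothetical.

```python
import math


def crop_front(points, size=20.0):
    """Keep only points inside a size x size square in front of the car."""
    half = size / 2
    return [p for p in points
            if 0.0 <= p[0] <= size and -half <= p[1] <= half]


def remove_ground(points, n_sectors=36, bin_size=0.5, threshold=0.05):
    """Split the scan into angular sectors and radial bins, then drop
    points that sit within `threshold` of the lowest point in their bin."""
    lowest = {}   # (sector, bin) -> lowest z seen in that bin
    keys = []     # bin key for each point, in input order
    for x, y, z in points:
        angle = math.atan2(y, x) + math.pi            # 0 .. 2*pi
        sector = int(angle / (2 * math.pi) * n_sectors) % n_sectors
        rbin = int(math.hypot(x, y) / bin_size)
        key = (sector, rbin)
        keys.append(key)
        if key not in lowest or z < lowest[key]:
            lowest[key] = z
    # Because each bin has its own ground height, a sloping or uneven
    # surface is handled bin by bin rather than with one global plane.
    return [p for p, k in zip(points, keys) if p[2] - lowest[k] > threshold]
```

A call such as `remove_ground(crop_front(scan))` would then leave only the points that stand proud of the local ground, which is the input the later clustering steps need.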

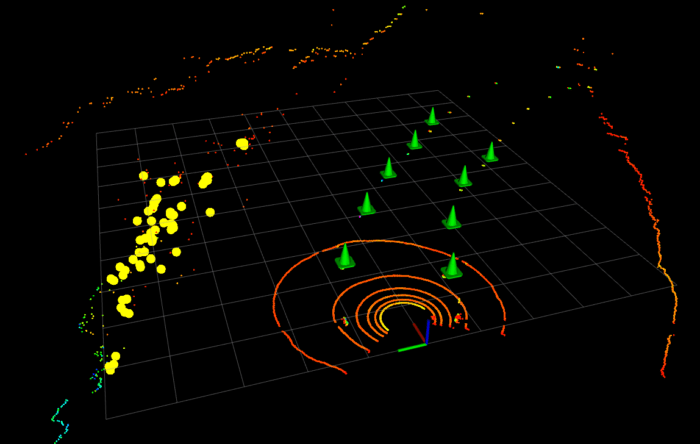

To solve this, we use Euclidean clustering and a nearest neighbour filter. We create clusters of points based on their Euclidean distance to each other. We then apply the nearest neighbour filter, which removes areas where the concentration of cone clusters is higher than expected.
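The two filtering steps can be sketched as below. This is an illustrative, brute-force version rather than the team's code: the function names, the 0.3 m clustering tolerance, the 1 m neighbourhood radius and the limit of one neighbouring cluster are all assumed values. The density rule (drop a cluster if too many other cluster centres lie nearby) is one plausible reading of the filter described above.

```python
import math
from collections import deque


def euclidean_clusters(points, tolerance=0.3):
    """Group points so that each point is within `tolerance` of at least
    one other point in its cluster (simple O(n^2) region growing)."""
    visited = [False] * len(points)
    clusters = []
    for seed in range(len(points)):
        if visited[seed]:
            continue
        visited[seed] = True
        queue, members = deque([seed]), []
        while queue:
            i = queue.popleft()
            members.append(points[i])
            for j in range(len(points)):
                if not visited[j] and math.dist(points[i], points[j]) <= tolerance:
                    visited[j] = True
                    queue.append(j)
        clusters.append(members)
    return clusters


def centroid(cluster):
    """Mean position of the points in a cluster."""
    n = len(cluster)
    return tuple(sum(p[k] for p in cluster) / n for k in range(len(cluster[0])))


def nearest_neighbour_filter(clusters, radius=1.0, max_neighbours=1):
    """Drop clusters that have more than `max_neighbours` other cluster
    centres within `radius`; cones are assumed to be spaced further apart."""
    centres = [centroid(c) for c in clusters]
    kept = []
    for i, centre in enumerate(centres):
        neighbours = sum(1 for j, other in enumerate(centres)
                         if j != i and math.dist(centre, other) <= radius)
        if neighbours <= max_neighbours:
            kept.append(clusters[i])
    return kept
```

On a real scan a spatial index such as a k-d tree would replace the nested loops, but the logic is the same: isolated clusters survive, while dense runs of clusters such as grass or barriers are discarded.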

Grass on the side of the track (shown by the yellow clusters) removed by Euclidean clustering and the nearest neighbour filter

Barriers on the side of the track (shown by the yellow clusters) removed by Euclidean clustering and the nearest neighbour filter

Finally, we use a grid-based object detection algorithm. After processing the point cloud as above, we create a grid in front of the car, divided into squares of equal size, and fill it in based on where the points are. We then classify cells in the grid as cones based on the pattern of cells in that area.
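To make the idea concrete, here is a small sketch of a grid-based detector. It is an assumption-laden illustration, not the team's algorithm: the pattern rule used here (an occupied cell whose eight neighbours are all empty counts as a cone) is only one simple choice, and the function names, the 0.5 m cell size and the axis convention (`x` forward, `y` left) are hypothetical.

```python
import math


def build_grid(points, size=20.0, cell=0.5):
    """Mark which cells of a size x size grid in front of the car
    contain at least one point."""
    n = int(size / cell)
    grid = [[False] * n for _ in range(n)]
    for p in points:
        row = math.floor(p[0] / cell)                # distance ahead
        col = math.floor((p[1] + size / 2) / cell)   # left/right offset
        if 0 <= row < n and 0 <= col < n:
            grid[row][col] = True
    return grid


def detect_cones(grid):
    """Return (row, col) of occupied cells with no occupied neighbours.
    Assumed pattern: a cone is small and isolated, a wall is not."""
    n = len(grid)
    cones = []
    for r in range(n):
        for c in range(n):
            if not grid[r][c]:
                continue
            neighbours = sum(
                grid[rr][cc]
                for rr in range(max(0, r - 1), min(n, r + 2))
                for cc in range(max(0, c - 1), min(n, c + 2))
                if (rr, cc) != (r, c)
            )
            if neighbours == 0:
                cones.append((r, c))
    return cones
```

One weakness of this simple rule is that a cone straddling a cell boundary occupies two adjacent cells and is missed, so a practical version would need a more tolerant pattern.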

Continually improving

There’s more to be done! We’re currently working on correcting the motion distortion in LiDAR scans that arises when the car goes faster than 8m/s. We’re also working on accumulating LiDAR scans, because the pitching motion of the LiDAR while racing causes it to ‘miss’ cones further away in some scans.

As seen in the video, the LiDAR pipeline allows us to very accurately estimate the locations of cones up to 20 metres in front of the car. By comparison, our camera pipeline’s depth estimation is too inaccurate to be used beyond 8 to 10 metres.

William the wheelchair

There’s another key ingredient that has allowed us to innovate and create a robust LiDAR object detection pipeline without having a car: William, our beloved wheelchair. In previous seasons, we entered the software-only part of the Formula Student AI competition, and this will be our first season making our own electric self-driving race car. William was provided to us by Mapix technologies two seasons ago.

The electric wheelchair has allowed us to test our software frequently. We do this in a car park at the University’s King’s Buildings campus at weekends. William used to be a test rig for the Mapix technologies team. He came with his chassis, wheels and batteries, and we’ve fitted him out with a computer, sensors, and an emergency brake system.

Testing with William

Real life learning opportunities with Formula Student

On a more general note, student-led engineering projects like Formula Student provide a phenomenal opportunity for students to practise what they learn in classrooms and get a taste of what work after University is like. More importantly, given that we need to govern ourselves to plan, design, and engineer as a team, we learn to think and engineer in terms of constraints such as budgets, time, resources and motivation.

We’d like to thank Mapix technologies for their generous support over the years. Our team has grown in size, maturity and ambition, and there’s no doubt in our minds that Mapix technologies has been key in enabling all of us to grow the way we have.

For more information on the Edinburgh University Formula Student team click here

For more information on the Velodyne Puck LiDAR sensor click here